25+ natural language processing with transformers github

Polyglot - A natural language pipeline supporting hundreds of languages. Natural Language Processing with Transformers course: 24 hours of live, interactive sessions by NLP master practitioners.

29 - Code - Keyword extraction with Sentence Transformers and.

Contribute to ymcui/Chinese-ELECTRA development by creating an account on GitHub. Traditional search systems use keywords to find data. 28 - Theory - Keyword extraction with Sentence Transformers and diversity with MMR and Max Sum Similarity.mp4.

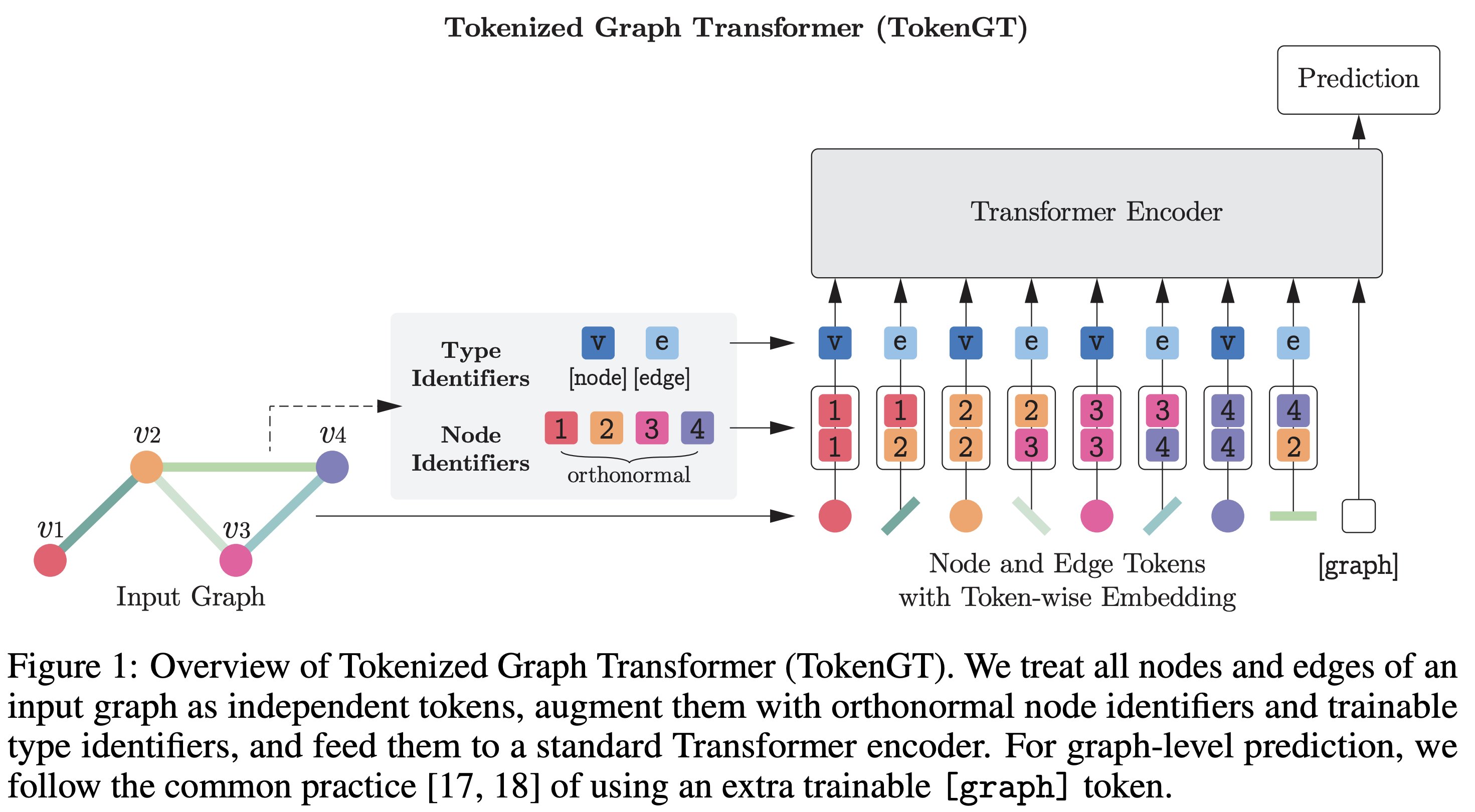

4-2. Seq2Seq (Attention). Automatic conversion from .py to .ipynb. The cornerstone of the Transformer architecture is the multi-head attention (MHA) mechanism, which models pairwise interactions between the elements of the sequence. Hugging Face Datasets.
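As a minimal sketch of the pairwise interactions mentioned above, the following pure-Python snippet computes scaled dot-product attention for a single head. Multi-head attention runs several such heads in parallel on learned projections of the input; those projections are omitted here. The function names and the toy vectors are illustrative assumptions, not taken from any of the repositories listed.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for one attention head.

    queries and keys are lists of vectors with the same dimension d_k;
    values holds one vector per key.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # One score per (query, key) pair: dot product scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        row = softmax(scores)
        weights.append(row)
        # Each output is the attention-weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(row, values)) for j in range(len(values[0]))]
        )
    return outputs, weights

# Toy sequence of three 2-dimensional token vectors (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and says how strongly one element of the sequence attends to every other element.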

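In the 1950s Alan Turing published an article that proposed a measure of intelligence now called the Turing. Natural Language Processing Tutorial for Deep Learning Researchers. Szegedy introduced the concept of adversarial examples at ICLR 2014 [6]. As shown in the figure above, adversarial examples can be used for both attack and defense, and adversarial training is one of the defensive methods in the adversarial family. Its basic principle is to construct adversarial examples by adding perturbations and feed them to the model during training, using attack as defense to improve the model's robustness when it encounters adversarial examples, and to some extent it can also improve.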

Simple Transformer models are built with a particular Natural Language Processing (NLP) task in mind. One-line dataloaders for many public datasets. Repository to track the progress in Natural Language Processing (NLP), including the datasets and the current state-of-the-art for the most common NLP tasks.

2.1 Introduction to transformers. 2.2 Scaled dot-product attention. 2.3 Multi-headed attention. Become an expert in Git and GitHub. Module 9 assignment due.

Each such model comes equipped with features and functionality designed to best fit the task it is intended to perform. Given that languages can be used to express an infinite variety of valid sentences (the property of digital infinity).

inproceedings wolf-etal-2020-transformers, title: Transformers. TextBrewer is a PyTorch-based model distillation toolkit for natural language processing. Replacing RNNs in many problems in natural language processing, speech processing and computer vision 827453431.

Updated Jul 25, 2021. It's built on the very latest research and was designed from day one to be used in real products. TextBlob - Providing a consistent API for diving into common natural language processing (NLP) tasks.

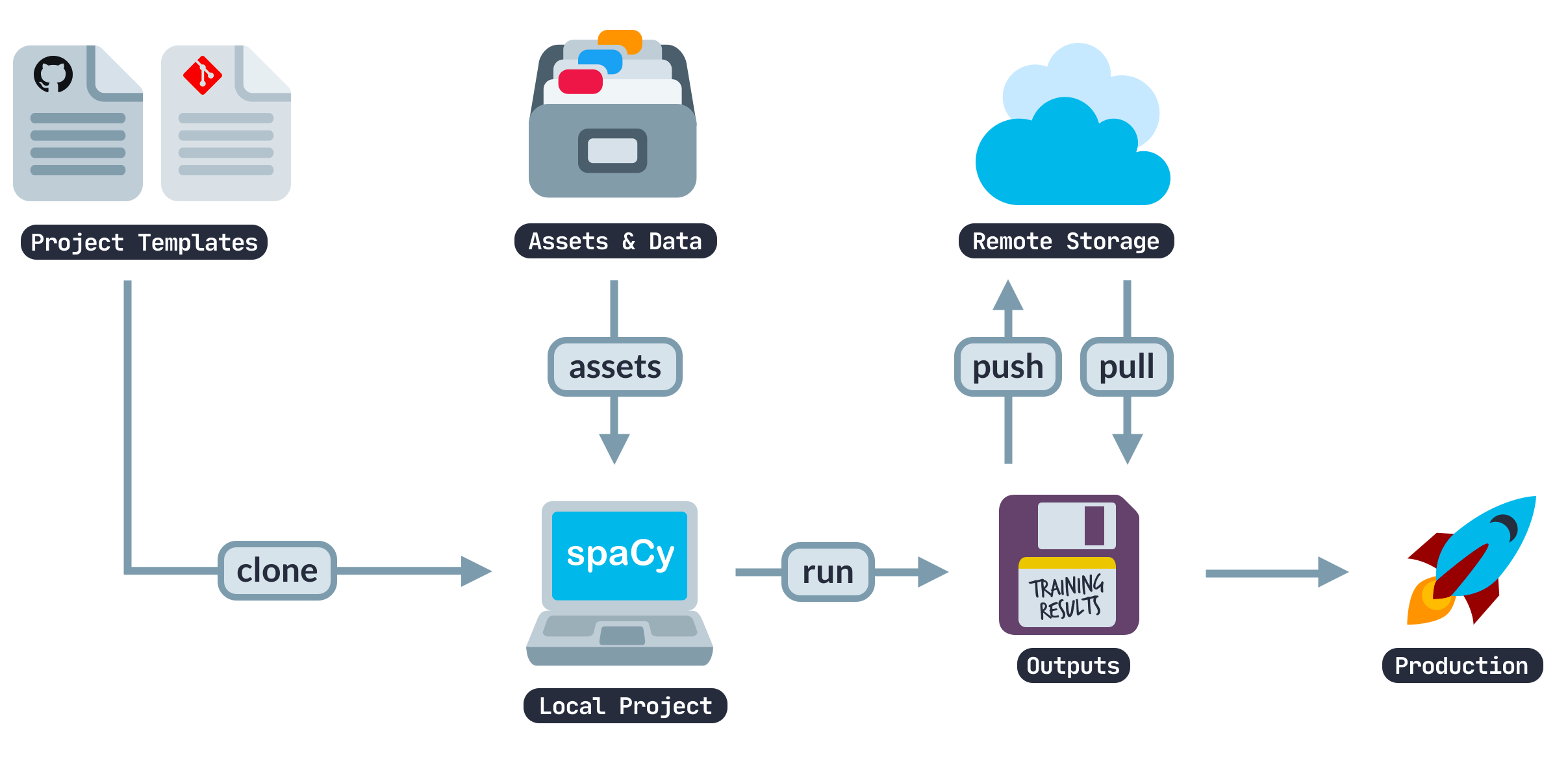

This repository contains the example code from our O'Reilly book Natural Language Processing with Transformers. spaCy comes with pretrained pipelines and currently supports tokenization and.

Text for tasks like text classification, information extraction, question answering, summarization. Module 11: week of 11/21/2022. Backed by state-of-the-art machine learning models, data is transformed into vector representations for search, also known as embeddings.

This is important because it allows us to robustly detect the language of a text even when the text contains words in other languages, e.g. Semantic search applications have an understanding of natural language and identify results that have the same meaning, not necessarily the same keywords. Bidirectional encoder representations from transformers (BERT) is a deep learning language model constructed using the encoder structure from the transformer [4].
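To illustrate how semantic search ranks results by meaning rather than by shared keywords, here is a small sketch that compares vector representations with cosine similarity. The three-dimensional vectors are hypothetical stand-ins for embeddings; a real system would obtain them from a trained model such as BERT, and the `search` helper is an assumption made for this example.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def search(query_vec, index, top_k=2):
    """Return the top_k (score, text) pairs, highest similarity first."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in index.items()]
    return sorted(scored, reverse=True)[:top_k]

# Hypothetical embeddings: the numbers are made up for illustration only.
index = {
    "How do I reset my password?": [0.9, 0.1, 0.0],
    "Steps to recover account access": [0.8, 0.3, 0.1],
    "Best pizza places nearby": [0.0, 0.2, 0.9],
}
query_vec = [0.85, 0.2, 0.05]  # stands in for a query like "forgot my login"
results = search(query_vec, index)
```

The query shares no keywords with the two account-related entries, yet both rank above the unrelated one because their vectors point in a similar direction.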

"Hola" means "hello" in Spanish. A language model is a probability distribution over sequences of words. Quepy - A Python framework to transform natural language questions to queries in a database query language.

State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow. langid.py - Stand-alone language identification system.

A language model gives us the probability of a sequence of words. cui-etal-2020-revisiting, title: Revisiting Pre-Trained Models for Chinese Natural Language Processing, author: Cui, Yiming and Che, Wanxiang and Liu, Ting and Qin, Bing and Wang, Shijin and Hu, Guoping. It has tools for natural language processing and machine learning, among others.
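To make the idea of a probability over a sequence of words concrete, the sketch below trains a bigram model on a three-sentence toy corpus and multiplies the conditional probabilities with the chain rule. The bigram approximation, the add-one smoothing and the corpus are all assumptions chosen for brevity; transformer language models estimate the same kind of quantity with a neural network instead of counts.

```python
from collections import Counter

def train_bigram_model(sentences):
    """Count contexts and bigrams over whitespace-tokenized sentences."""
    contexts, bigrams, vocab = Counter(), Counter(), set()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        vocab.update(tokens)
        contexts.update(tokens[:-1])
        bigrams.update(zip(tokens[:-1], tokens[1:]))
    return contexts, bigrams, len(vocab)

def sequence_probability(sentence, model, alpha=1.0):
    """P(w_1..w_m) as a product of smoothed P(w_i | w_{i-1})."""
    contexts, bigrams, vocab_size = model
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, word in zip(tokens[:-1], tokens[1:]):
        # Add-alpha smoothing keeps unseen bigrams from zeroing the product.
        prob *= (bigrams[(prev, word)] + alpha) / (contexts[prev] + alpha * vocab_size)
    return prob

# Toy corpus (made up for illustration).
model = train_bigram_model(["the cat sat", "the dog sat", "the cat ran"])
p_seen = sequence_probability("the cat sat", model)
p_scrambled = sequence_probability("sat cat the", model)
```

A word order that appears in the corpus receives a higher probability than the same words scrambled, which is the behavior the definition above describes.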

NLTK - A leading platform for building Python programs to work with human language data. allenai/allennlp. Transformers provides thousands of pretrained models to perform tasks on different modalities such as text, vision and audio.

Training a Model in Hugging Face. BERT has shown impressive.

Note that most chapters require a GPU to run in a reasonable amount of time, so we recommend one of the. Transfer Learning topics: 3.1 Introduction to Transfer Learning. 3.2 Introduction to PyTorch. 3.3 Fine-tuning transformers with PyTorch. Practical Introduction to Natural Language Processing.

Immersive learning with guided hands-on exercises (cloud labs). It includes various distillation techniques from both the NLP and CV fields and provides an easy-to-use distillation framework, which allows users to quickly experiment with state-of-the-art distillation methods to compress the model with a relatively small sacrifice in the. An easy-to-use wrapper library for the Transformers library.

Datasets is a lightweight library providing two main features. 2020/3/25: Chinese ELECTRA-small/base. Natural Language Processing Tutorial for Deep Learning Researchers - GitHub - graykode/nlp-tutorial.

Language models generate probabilities by training on text corpora in one or many languages. Pattern - A web mining module.

More than 83 million people use GitHub to discover, fork and contribute to over 200 million projects. Given such a sequence of length m, a language model assigns a probability to the whole sequence. One-liners to download and pre-process any of the major public datasets (text datasets in 467 languages and dialects, image datasets, audio datasets, etc.) provided on the HuggingFace Datasets Hub. With a simple command like squad_dataset.

Transformers were first used in auto-regressive models, following early sequence-to-sequence models [44], generating output tokens one by one. State-of-the-Art Natural Language Processing, author: Wolf, Thomas and Debut, Lysandre and Sanh, Victor and Chaumond, Julien and Delangue, Clement and Moi, Anthony and Cistac, Pierric and Rault, Tim and Louf, Remi and Funtowicz, Morgan and Davison, Joe and Shleifer, Sam and von Platen, Patrick. PyText - A natural language modeling framework based on PyTorch.

Stands on the giant shoulders of NLTK and Pattern and. You can run these notebooks on cloud platforms like Google Colab or your local machine. 10 Install GitHub Desktop and create GitHub Repository.mp4.

These models can be applied on. Natural language processing (NLP) is a field of computer science that studies how computers and humans interact. spaCy is a library for advanced Natural Language Processing in Python and Cython.

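To solve this problem, CMU and Google Brain released a new paper in January 2019, Transformer-XL: Attentive Language Models beyond a Fixed-Length Context, which combines the advantages of RNN sequence modeling and the Transformer self-attention mechanism: it applies the Transformer attention module to each segment of the input data and uses a recurrence mechanism to learn across consecutive segments. Natural Language Understanding with BERT topics: 4.1 Introduction to BERT. You can use N language models, one per language, to score your text.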
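The idea of scoring a text with N language models, one per language, can be sketched with character-bigram models. Everything here is an assumption made for illustration: the two tiny training texts, the 27-symbol alphabet (accents are simply dropped), the add-one smoothing and the helper names. It is not how langid.py or any other listed library is implemented.

```python
import math
from collections import Counter

ALPHABET = "abcdefghijklmnopqrstuvwxyz "  # lowercase letters plus space

def normalize(text):
    """Lowercase the text and drop characters outside ALPHABET."""
    return "".join(ch for ch in text.lower() if ch in ALPHABET)

def train_char_bigram_model(text):
    """Count character contexts and character bigrams."""
    chars = normalize(text)
    contexts = Counter(chars[:-1])
    pairs = Counter(zip(chars[:-1], chars[1:]))
    return contexts, pairs

def log_likelihood(text, model, alpha=1.0):
    """Smoothed log-probability of the text under one language's model."""
    contexts, pairs = model
    chars = normalize(text)
    return sum(
        math.log((pairs[(a, b)] + alpha) / (contexts[a] + alpha * len(ALPHABET)))
        for a, b in zip(chars[:-1], chars[1:])
    )

def identify_language(text, models):
    """Pick the language whose model gives the text the highest score."""
    return max(models, key=lambda lang: log_likelihood(text, models[lang]))

# Tiny made-up training texts; real systems train on large corpora.
models = {
    "english": train_char_bigram_model(
        "the quick brown fox jumps over the lazy dog and this is a simple "
        "english sentence about language"
    ),
    "spanish": train_char_bigram_model(
        "el rapido zorro marron salta sobre el perro perezoso y esta es una "
        "frase sencilla en espanol sobre el idioma"
    ),
}
guess_en = identify_language("this is the language", models)
guess_es = identify_language("esta es una frase", models)
```

Because the decision sums evidence over every character pair, a few foreign words in a longer text shift the total only slightly, which is why this kind of scoring can stay robust on mixed-language input.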

Transformers have advanced the field of natural language processing (NLP) on a variety of important tasks.

Mohammad Sabik Irbaz

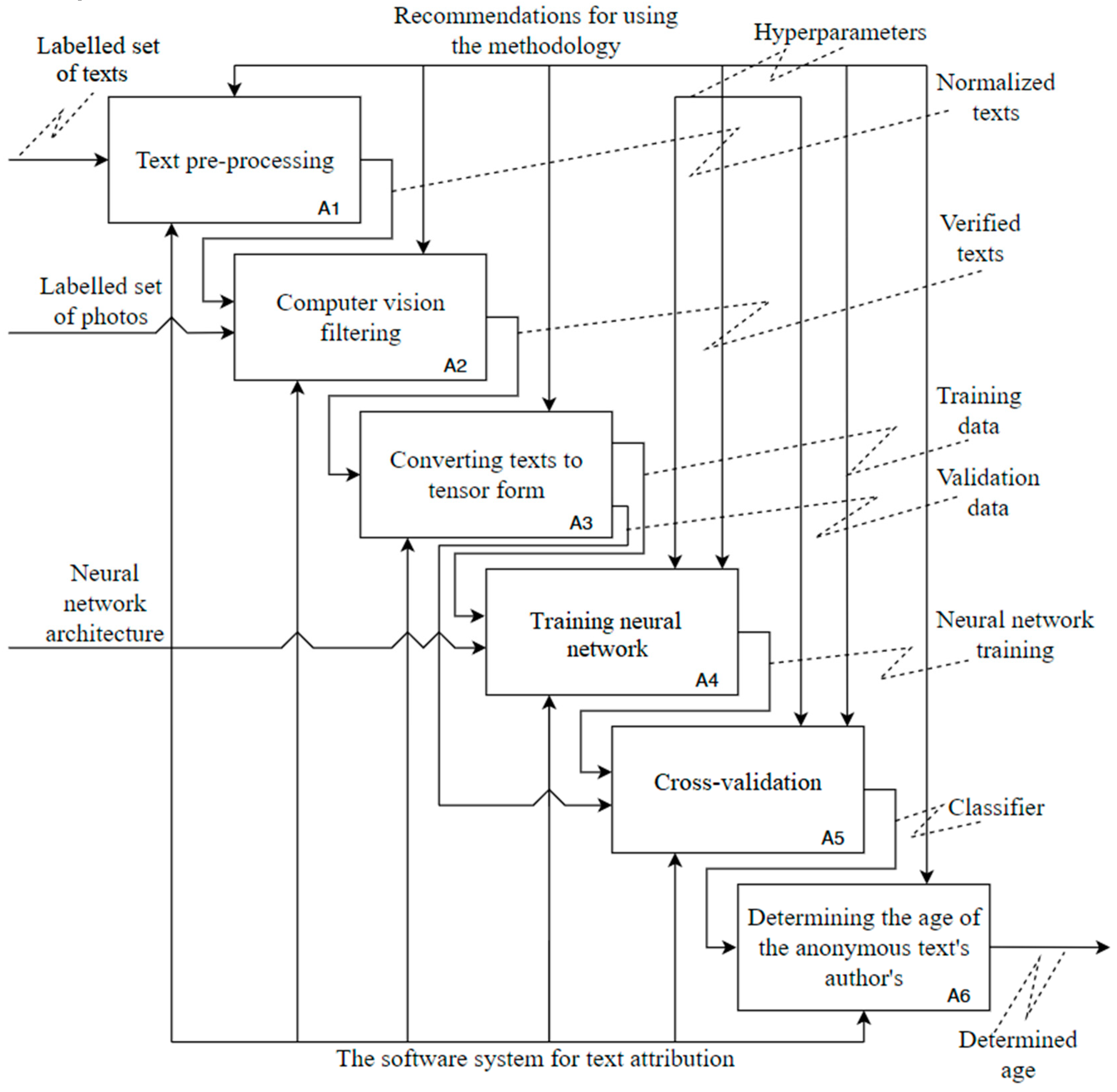
